If you’re an owner or administrator of a WordPress website, you may have come across the setting in your website’s backend that says: “Discourage search engines from indexing this site.”

But what does this mean exactly? What happens when you hide your site from search engines, and why would you want to do this?

In this article, we will explain all this to clear things up for you. Moreover, we will provide all the knowledge you need to responsibly control the visibility of your entire website or just some specific pages.

When you create a website, considering how search engines index and rank your content is vital. Search engines employ automated programs known as spiders, robots, or crawlers to scan the web and index website content. By default, search engines will index your website unless you instruct them otherwise.

What Happens When You “Discourage Search Engines from Indexing This Site”?

Before we start, let’s clarify that saying a website or web page “is being indexed” is different from saying it is ranking (being listed) in Google results. Indexing is the process in which the search engine finds content, stores it, and parses it. The search engine then uses this information to decide the position at which the page is listed in search results, which is its ranking.

When you discourage search engines from indexing your site, you are asking search engines not to crawl and index your website. If they comply, your web pages won’t appear in search engine results pages (SERPs).

However, selecting this option

- does not guarantee that search engines will not index your site. Reputable search engines like Google and Bing will respect this setting, but others may not.

- does not mean you block access to your website. You still allow access to your website directly via its URL.

- only prevents search engines from indexing your site; it does not prevent other websites from linking to your content.

If you want to be sure that your website is not visible to search engines, you will need stronger measures such as password protection. More specific robots.txt rules give you finer control, but they remain requests rather than guarantees. But before we go there, let’s see how you can check whether your website is visible to search engines and why you would want to hide it from them.

How to Check Your Website’s Visibility to Search Engines

If you’re not sure whether you’ve prevented the search engines from indexing your site, don’t worry! There are a few easy ways to check.

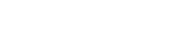

The obvious first choice is to log in to your WordPress admin and check whether you’ve selected the option to “Discourage search engines from indexing this site”.

Go to your WordPress dashboard and click on “Settings” and then “Reading.” If the box is checked, search engines will be discouraged from indexing your site.

Another way to check is to look at your site’s robots.txt file. To do this, simply go to your website and add “/robots.txt” to the end of the URL (e.g. www.yourwebsite.com/robots.txt).

If you see the below displayed, then this means that search engines should not crawl your WordPress website:

User-agent: *

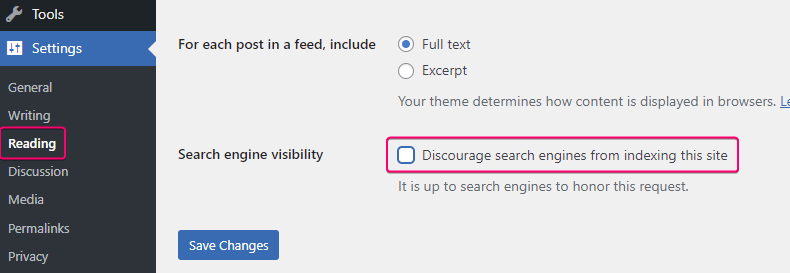

Disallow: /You can also check your site’s meta tags to see whether they include a tag that discourages search engines. To do this, right-click on your website and select “View Page Source”.

Then, search for meta name='robots' in the code. If the tag’s content includes noindex, nofollow, search engines are being asked not to index the page or follow its links.
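The manual check above can also be scripted. The following is a minimal sketch using only Python's standard library; the class name `RobotsMetaParser`, the helper `robots_directives`, and the sample page source are hypothetical and exist only for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attributes = {name.lower(): (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for directive in attributes.get("content", "").split(","):
                directive = directive.strip().lower()
                if directive:
                    self.directives.add(directive)


def robots_directives(html):
    """Return the set of robots directives found in the given page source."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page source used only for illustration.
sample_html = """
<html><head>
<meta name='robots' content='noindex, nofollow' />
</head><body>Hello</body></html>
"""

directives = robots_directives(sample_html)
print("noindex" in directives, "nofollow" in directives)
```

To check a live page, you would pass its downloaded source to `robots_directives` instead of the sample string.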

What is a “noindex” tag?

The noindex tag prevents a search engine from adding the page to the search index. That means that the page will not be visible in the search results.

The tag can be applied to the whole website or only to specific pages or groups of pages.

What is a “nofollow” tag?

The nofollow tag affects the links a page contains. When you use this tag on a link, search engines may still crawl the linked page, but the linked page won’t receive the same SEO value from the link as it would if the “nofollow” attribute were not present.

The tag can be applied on the whole website or on specific links.

Reasons to Stop Search Engines from Crawling Your Website

It is crucial to allow search engines like Google to discover your WordPress website and index its content. This indexing helps improve your site’s visibility and ranking in search engine results, driving organic traffic. However, there are instances when website owners may want to prevent search engines from crawling their WordPress site. Let’s see why…


Allowing search engines to crawl a site can expose information that was never meant to be public, such as user data or login credentials. Common reasons to block crawlers include:

- One reason to stop search engines from crawling a WordPress site is to protect sensitive information, personal data, and confidential documents. This helps to safeguard the privacy and security of individuals and organizations.

- An unfinished website can also be a reason. If your website is under construction, you might not want visitors to access it until it’s complete. Discouraging search engines from crawling a testing environment prevents them from accidentally indexing unfinished content.

- Limiting exposure of your website can reduce the risk of hacking attempts and unauthorized access. If certain pages or directories should remain hidden, this measure adds an extra layer of security.

- You may want to restrict content to specific users or groups. Controlling your content distribution by keeping certain content away from search engine results can be very useful in such cases.

- You can avoid duplicate content issues. You may want to protect your content from being copied by other websites that then claim it as their own. If Google detects such duplication, it may treat it as plagiarism and penalize the pages involved, which harms your rankings.

- Site performance can also be a reason to hide your site from search engines. If search engines do not crawl your content, the load on your server is reduced, which can improve the loading speed and overall performance of your WordPress site.

- You can avoid index bloat. This term refers to the cases where your website may contain an excessive number of low-quality, redundant, or irrelevant pages that the search engine has to crawl. This can harm a site’s SEO, because search engines have to spend extensive time to scan all these unnecessary pages instead of delivering relevant results to users.

That is why it is important to audit and clean up your site’s indexing regularly. Only then will you be sure it’s lean and focused on high-quality pages.

No matter which of the above scenarios you relate to, it’s important to weigh the advantages and disadvantages of hiding webpages from search engines in terms of both SEO and user experience.

How to Prevent Search Engines from Crawling Your Entire Website

There are several routes to choose from. As you’ve already guessed, one way is from inside the admin area, like we mentioned before.

By Using the WordPress Built-In Feature

If you’re using WordPress and want to ask search engines not to crawl your site, you can use the built-in feature to discourage search engines from indexing your site. Here’s how:

- Log in to your WordPress dashboard and click on “Settings” and then “Reading.”

- Look for the option that says “Search Engine Visibility” and check the box that says “Discourage search engines from indexing this site.”

- Click “Save Changes” at the bottom of the page.

We remind you that this feature may not prevent all search engines from indexing your content. You might need to edit your robots.txt file if you want to make sure that all search engines do not index your site.

By Editing robots.txt

The robots.txt file offers plenty of capabilities, including a way to ask all search engine crawlers to stay away from your entire website. Keep in mind that robots.txt rules are also requests: reputable crawlers honor them, but nothing forces a crawler to comply.

To edit this file, connect to your website’s server using FTP (File Transfer Protocol), SFTP (secure FTP), the command line, or a file editor on your server, whichever your host provides. On shared hosts, the robots.txt file is usually located in the public_html folder or a folder with your domain name. If it doesn’t exist, you can create one using a plain text editor.

Once you locate the robots.txt file, open it with your favorite editor and add the directives that you want to use. To ask search engines not to crawl your entire site, you can use the following code:

User-agent: *

Disallow: /

This code tells all search engine crawlers (User-agent: *) not to access any pages on your site (Disallow: /). If you want to ask a specific search engine to stop crawling your entire website, name its user agent in the code. For example:

User-agent: Googlebot

Disallow: /

In the same way, you can define any other agent. Here are some of the popular ones:

| User Agent Name | Search Engine |
|---|---|
| Googlebot | Google |
| Bingbot | Bing |
| YandexBot | Yandex |
| DuckDuckBot | DuckDuckGo |
| Baiduspider | Baidu |
| Slurp | Yahoo! |

Once you’ve applied your edits, save the robots.txt file and verify the changes by visiting www.YOURDOMAIN.com/robots.txt.
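Before uploading new rules, it can help to test how a crawler would interpret them. The following is a minimal sketch using Python's standard `urllib.robotparser` module; the helper `is_allowed` and the `example.com` URL are hypothetical placeholders, and real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The two rule sets discussed in this section.
block_everyone = "User-agent: *\nDisallow: /\n"
block_google_only = "User-agent: Googlebot\nDisallow: /\n"

# example.com is a placeholder domain.
page = "https://example.com/sample-page/"

for agent in ("Googlebot", "Bingbot"):
    print(
        agent,
        is_allowed(block_everyone, agent, page),
        is_allowed(block_google_only, agent, page),
    )
```

The first rule set blocks both crawlers, while the second blocks only Googlebot and leaves other user agents unaffected.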

By using the “X-Robots-Tag” header

The “X-Robots-Tag” header can be used to apply any rule that can be used in a robots meta tag. So here is how to use it to apply the noindex and nofollow rules.

On a WordPress installation running on the Apache web server, edit the .htaccess file and insert the following lines of code (the Header directive requires Apache’s mod_headers module to be enabled):

<Files "*">

Header set X-Robots-Tag "noindex, nofollow"

</Files>

The above code sets the rule for the whole website. To do this for a specific page, insert:

<Files "your-page.html">

Header set X-Robots-Tag "noindex, nofollow"

</Files>

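And to apply this to a directory, insert the following. Note that Apache only accepts <Directory> blocks in the main server or virtual host configuration, not in .htaccess files: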

<Directory "/path/to/your/directory">

Header set X-Robots-Tag "noindex, nofollow"

</Directory>

Changes to .htaccess take effect on the next request. If you edited the main Apache configuration instead, you have to restart or reload Apache to apply the changes.
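To confirm that the header is actually being sent, you can read the directives back from a response's headers. The following is a minimal sketch; the function name `x_robots_directives` and the sample headers are hypothetical, and for simplicity it ignores the optional form in which a directive is prefixed with a specific crawler's name.

```python
def x_robots_directives(headers):
    """Return the set of directives found in any X-Robots-Tag header.

    `headers` is an iterable of (name, value) pairs, for example the
    list returned by `getheaders()` on an http.client response.
    """
    directives = set()
    for name, value in headers:
        # Header names are case-insensitive.
        if name.lower() != "x-robots-tag":
            continue
        for directive in value.split(","):
            directive = directive.strip().lower()
            if directive:
                directives.add(directive)
    return directives


# Hypothetical response headers, shaped like what the Apache rule would produce.
sample_headers = [
    ("Content-Type", "text/html; charset=UTF-8"),
    ("X-Robots-Tag", "noindex, nofollow"),
]

found = x_robots_directives(sample_headers)
print("noindex" in found, "nofollow" in found)
```

Your browser's developer tools show the same response headers if you prefer to check by hand.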

Hide Parts of Your Website from Search Engines

Now let’s see how you can stop search engines from indexing only certain pages or content types.

By Editing robots.txt

To prevent crawling of a specific directory, insert the lines below. They block all web crawlers (User-agent: *) from accessing “the-directory-name” and its contents:

User-agent: *

Disallow: /the-directory-name/

To block a specific page or file, use this code:

User-agent: *

Disallow: /path-to-file/file-name.ext

By Installing a WordPress Plugin

There are many popular SEO plugins that provide a feature that configures the search engine visibility of your WordPress website content. Here, we will see how you can do this with the help of the Yoast SEO plugin.

This popular plugin, among its great SEO features, provides a quick and easy way to stop search engines from indexing either a single page or a type of post.

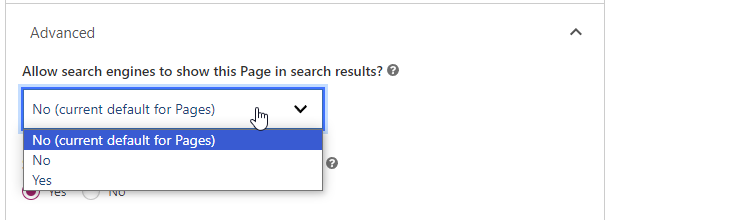

After you install and activate it, go to any page admin screen and, in the Yoast Settings under the editor, find the “Advanced” accordion tab.

There, you can choose whether to let search engines index this page or follow the links on it.

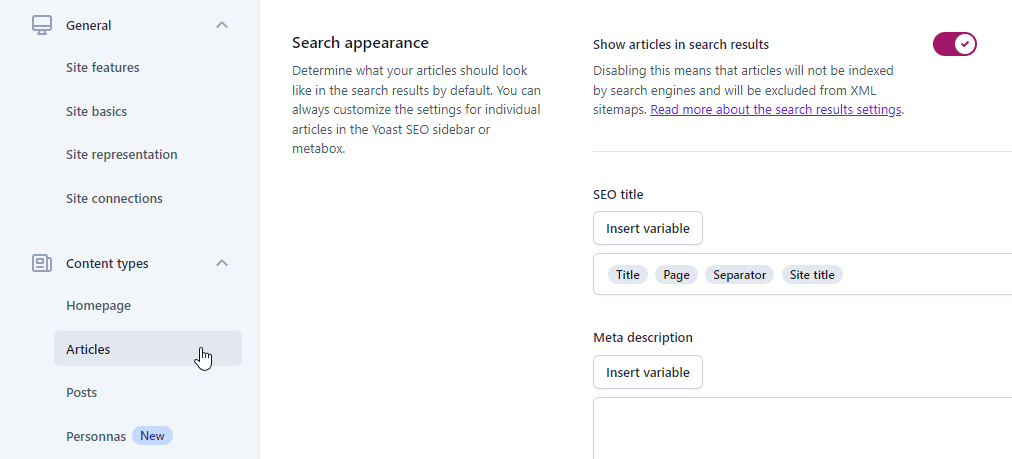

You can do the same for post types. Under Yoast SEO -> Settings, find the “Content types” accordion menu.

Then, select the post type you would like to hide from search engines and configure the “Show articles in search results” option as you wish.

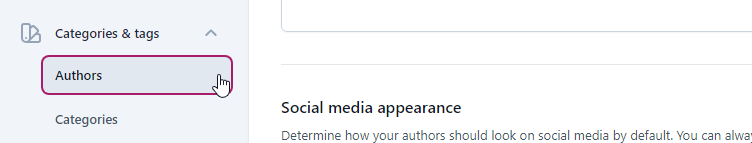

The same goes for the taxonomies. Under Yoast SEO -> Settings, find the “Categories & tags” accordion menu.

Select the taxonomy you want to hide (Authors, for example) and, as before, uncheck the “Show pages in search results” checkbox.

We recommend checking the rest of Yoast’s settings as well, located in the “Advanced” accordion tab. There, you can optimize crawling by removing unwanted metadata or hide your archives from the search results.

Potential Drawbacks of Preventing Page Indexing

Let’s talk about some reasons why you should be careful when deciding not to let search engines show your web pages in their search results. This is important for your website’s visibility and how it performs on the internet.

If search engines can’t find the web pages that hold valuable information about your business, people won’t either. This limits your website’s organic traffic and exposure, which translates to poor SEO.

Valuable information on your website could be something that’s really helpful or interesting to your target audience. If search engines can’t find it, people who are searching for that information won’t find it either. This can mean missed opportunities for brand awareness, conversions, monetization, or lead generation.

So, in a nutshell, be cautious when deciding to prevent search engines from indexing your pages. It’s like locking away valuable information that could help your website grow and succeed.

Frequently Asked Questions

How to Hide a WordPress Page from Google Search?

Here are two common routes you can follow:

Edit the robots.txt File:

- Connect to your website’s server using FTP.

- Locate the robots.txt file (in the public_html folder or a folder with your domain name).

- If it doesn’t exist, create one using a plain text editor.

- Add the directive that asks Google’s crawler not to crawl the page:

User-agent: Googlebot

Disallow: /your-page/

- Save the file and verify the changes by visiting www.YOURDOMAIN.com/robots.txt.
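If you manage several page-level rules, the file can be generated and checked before you upload it. The following is a minimal sketch; the helper `build_robots_txt` and the `example.com` URLs are hypothetical, and the check relies on Python's standard `urllib.robotparser`, which may not match every crawler's behavior exactly.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(rules):
    """Render {user_agent: [disallowed paths]} as robots.txt text."""
    groups = []
    for user_agent, paths in rules.items():
        lines = [f"User-agent: {user_agent}"]
        lines += [f"Disallow: {path}" for path in paths]
        groups.append("\n".join(lines))
    # Groups are separated by a blank line.
    return "\n\n".join(groups) + "\n"


robots_txt = build_robots_txt({"Googlebot": ["/your-page/"]})
print(robots_txt)

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# example.com is a placeholder domain.
print(parser.can_fetch("Googlebot", "https://example.com/your-page/"))
print(parser.can_fetch("Googlebot", "https://example.com/another-page/"))
```

Only the listed page is blocked for Googlebot; other pages and other crawlers are unaffected by this rule.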

Use a WordPress Plugin (e.g., Yoast SEO):

- Install and activate the Yoast SEO plugin.

- Go to the page or post you want to hide.

- In Yoast Settings under the editor, find the “Advanced” tab.

- Choose whether to let search engines index or follow links on this page.

How to Hide a WordPress Site from Search Engine Results?

You can accomplish this by following either of these options:

Using the Built-In WordPress Feature

- Log in to your WordPress dashboard.

- Navigate to “Settings” and then “Reading.”

- Find the “Search Engine Visibility” option and check the box that says “Discourage search engines from indexing this site.”

- Save the changes.

Please note that this built-in feature may not prevent all search engines from indexing your content, so it’s advisable to edit the robots.txt file for more comprehensive control.

Editing the robots.txt File

- Connect to your website’s server using FTP.

- Locate the robots.txt file, typically in the public_html folder or under your domain name folder. Create one if it doesn’t exist.

- Open the robots.txt file with a text editor and add the following code to block all search engine crawlers from accessing your entire site:

User-agent: *

Disallow: /

Conclusion

Managing your WordPress website’s visibility to search engines is a vital aspect of online presence. Whether you want to protect sensitive information or keep your work-in-progress under wraps, we’ve discussed some popular and effective ways to do this.

Hopefully, you can now apply these techniques and control what search engines “see” in line with your requirements.
